Datasets for the 3D Semantic Segmentation to the Open World (3DOW) Challenge

Overview


This page provides the datasets for the 3D Semantic Segmentation to the Open World (3DOW) Challenge, which are built on the SemanticKITTI and SemanticPOSS datasets.

Instructions

1. Develop your model on the SubKITTI dataset.

2. Use your optimized model to obtain results on the AugKITTI dataset.

3. Submit your results in the required format on the 3DOW Challenge page.

Label definition:

0-unknown, 1-car, 2-sign, 3-trunk, 4-plants, 5-pole, 6-fence, 7-building, 8-bike, 9-road.

The training dataset (SubKITTI) contains objects with labels 0-9.

The test dataset (AugKITTI) contains previously unseen objects that do not belong to any of the defined classes.
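As a convenience, the label definition can be written as a lookup table. The sketch below is only an illustration: `LABEL_NAMES` and `label_histogram` are hypothetical names, not part of the challenge toolkit, and the input is assumed to be a flat array of integer label IDs.

```python
import numpy as np

# Label IDs exactly as listed in the label definition on this page.
LABEL_NAMES = {
    0: "unknown", 1: "car", 2: "sign", 3: "trunk", 4: "plants",
    5: "pole", 6: "fence", 7: "building", 8: "bike", 9: "road",
}

def label_histogram(labels):
    """Count points per label ID (hypothetical helper for inspecting a scan)."""
    labels = np.asarray(labels, dtype=np.int64)
    counts = np.bincount(labels, minlength=len(LABEL_NAMES))
    return {LABEL_NAMES[i]: int(counts[i]) for i in range(len(LABEL_NAMES))}
```

For example, `label_histogram([1, 1, 9, 0])` reports two `car` points, one `road` point, and one `unknown` point.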

Download

Training dataset:

Test dataset:

Evaluation program: evaluate.py

Evaluation

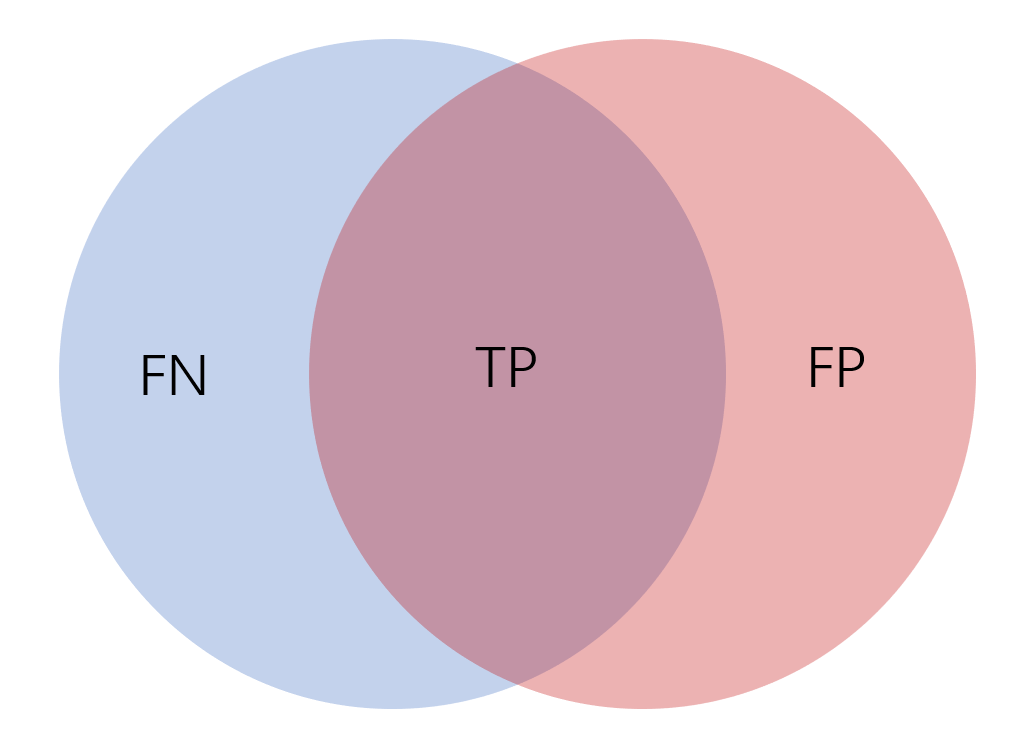

For 3D semantic segmentation tasks, we evaluate the outputs by:

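IoU (Intersection over Union) = TP / (TP + FP + FN)

where TP, FP, and FN are the numbers of true-positive, false-positive, and false-negative points for a class. The IoU is evaluated separately for each of the nine object categories.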
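The per-class IoU can be sketched as follows. This is a minimal illustration and not the provided evaluate.py: the function name `per_class_iou` is hypothetical, the nine object categories are assumed to be labels 1-9, and returning NaN for a class that appears in neither the prediction nor the ground truth is an assumption.

```python
import numpy as np

def per_class_iou(pred, gt, class_ids=range(1, 10)):
    """Return {class_id: IoU} with IoU = TP / (TP + FP + FN) for each class."""
    pred = np.asarray(pred)
    gt = np.asarray(gt)
    ious = {}
    for c in class_ids:
        tp = int(np.sum((pred == c) & (gt == c)))  # predicted c, truly c
        fp = int(np.sum((pred == c) & (gt != c)))  # predicted c, truly something else
        fn = int(np.sum((pred != c) & (gt == c)))  # truly c, predicted something else
        denom = tp + fp + fn
        # Assumption: a class absent from both arrays has an undefined IoU (NaN).
        ious[c] = tp / denom if denom > 0 else float("nan")
    return ious
```

With `pred = [1, 1, 2, 9]` and `gt = [1, 2, 2, 9]`, class 1 has TP = 1, FP = 1, FN = 0 and class 2 has TP = 1, FP = 0, FN = 1, so both get an IoU of 0.5, while class 9 gets 1.0.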

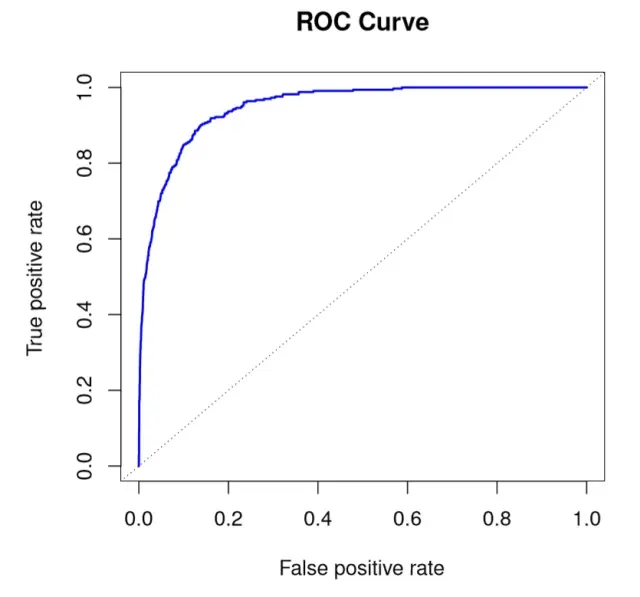

For OOD detection, we evaluate the confidence score by:

We also provide the AUROC for each predicted class, which reflects how reliable the model is when it assigns input data to that class. The AUROC is computed over all data predicted as that class. There are two special cases:

1. If none of the ID data is predicted into the specific class, the AUROC will be 0. (The predicted class will always be OOD)

2. If none of the OOD data is predicted into the specific class, the AUROC will be 1. (The predicted class will always be ID)

The confidence ranges from 0 to 1, and the threshold is uniformly sampled at intervals of 0.01.
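The per-class AUROC with a uniformly sampled threshold might be computed along these lines. This is a sketch under stated assumptions, not the provided evaluate.py: the function names are hypothetical, a higher confidence is assumed to mean "more likely ID", a point is treated as ID when its confidence is at or above the threshold, and the ROC curve is integrated with the trapezoidal rule. The two special cases follow the rules above; checking the "no ID data" case first is also an assumption.

```python
import numpy as np

def auroc_threshold_sweep(conf, is_id, step=0.01):
    """AUROC from thresholds sampled uniformly in [0, 1] (assumes high conf = ID)."""
    conf = np.asarray(conf, dtype=float)
    is_id = np.asarray(is_id, dtype=bool)
    n_id = int(is_id.sum())
    n_ood = int((~is_id).sum())
    if n_id == 0:   # special case 1: no ID data predicted into this class
        return 0.0
    if n_ood == 0:  # special case 2: no OOD data predicted into this class
        return 1.0
    thresholds = np.linspace(0.0, 1.0, int(round(1.0 / step)) + 1)
    tpr = [float(np.sum(conf[is_id] >= t)) / n_id for t in thresholds]
    fpr = [float(np.sum(conf[~is_id] >= t)) / n_ood for t in thresholds]
    # Close the curve at (0, 0) in case some confidences equal exactly 1.
    tpr.append(0.0)
    fpr.append(0.0)
    # Trapezoidal rule; FPR is non-increasing as the threshold grows.
    area = 0.0
    for i in range(len(fpr) - 1):
        area += (fpr[i] - fpr[i + 1]) * (tpr[i] + tpr[i + 1]) / 2.0
    return area

def per_class_auroc(pred, conf, is_id, class_ids=range(1, 10)):
    """Evaluate the AUROC only over the points predicted as each class."""
    pred = np.asarray(pred)
    conf = np.asarray(conf, dtype=float)
    is_id = np.asarray(is_id, dtype=bool)
    return {c: auroc_threshold_sweep(conf[pred == c], is_id[pred == c])
            for c in class_ids}
```

Because the thresholds are 0.01 apart, confidences that differ by less than 0.01 may not be separated by any threshold, so the result can differ slightly from an exact rank-based AUROC.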

Cite

@inproceedings{behley2019arxiv,

author = {J. Behley and M. Garbade and A. Milioto and J. Quenzel and S. Behnke and C. Stachniss and J. Gall},

title = {{SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences}},

booktitle = {Proc. of the IEEE International Conf. on Computer Vision (ICCV)},

year = {2019}

}

@inproceedings{pan2020semanticposs,

author={Pan, Yancheng and Gao, Biao and Mei, Jilin and Geng, Sibo and Li, Chengkun and Zhao, Huijing},

title={SemanticPOSS: A point cloud dataset with large quantity of dynamic instances},

booktitle={2020 IEEE Intelligent Vehicles Symposium (IV)},

pages={687--693}, year={2020}

}

@inproceedings{geiger2012cvpr,

author = {A. Geiger and P. Lenz and R. Urtasun},

title = {{Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite}},

booktitle = {Proc.~of the IEEE Conf.~on Computer Vision and Pattern Recognition (CVPR)},

pages = {3354--3361},

year = {2012}

}

License

This dataset is released under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License.